Robots assisting humans in complex domains need the ability to represent, reason with, and learn from, different descriptions of incomplete domain knowledge and uncertainty. This paper focuses on the challenge of incrementally and interactively discovering previously unknown axioms governing domain dynamics, and describes an architecture that integrates declarative programming and relational reinforcement learning to address this challenge.

While the study of a single network is well-established, technological advances now allow for the collection of multiple networks with relative ease. Increasingly, anywhere from several to thousands of networks can be created from brain imaging, gene co-expression data, or microbiome measurements. And these networks, in turn, are being looked to as potentially powerful features to be used in modeling. However, with networks being non-Euclidean in nature, how best to incorporate networks into standard modeling tasks is not obvious. In this paper, we propose a Bayesian modeling framework that provides a unified approach to binary classification, anomaly detection, and survival analysis with network inputs. Our methodology exploits the theory of Gaussian processes and naturally requires the use of a kernel, which we obtain by modifying the well-known Hamming distance. Moreover, kernels provide a principled way to integrate data of mixed types. We motivate and demonstrate the whole of our methodology in the area of microbiome research, where network analysis is emerging as the standard approach for capturing the interconnectedness of microbial taxa across both time and space.

We also discuss briefly some related research areas and point to some potential promising research directions.
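To make the kernel idea mentioned above more concrete, here is a minimal sketch of one way a Hamming distance between networks could be turned into a kernel suitable for a Gaussian process. The abstract does not say how the Hamming distance is modified, so everything below is an assumption made for illustration: the function names, the normalization by the number of node pairs, the exponential form, and the `gamma` parameter are all hypothetical. The exponential form is chosen because, for binary edge indicators, the Hamming distance equals the squared Euclidean distance, so the result is a Gaussian (RBF) kernel on edge vectors and therefore positive semi-definite.

```python
import numpy as np


def hamming_distance(a, b):
    """Count node pairs whose edge status differs between two undirected graphs.

    `a` and `b` are square adjacency matrices over the same node set.
    """
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    if a.ndim != 2 or a.shape[0] != a.shape[1] or a.shape != b.shape:
        raise ValueError("expected two square adjacency matrices of equal size")
    upper = np.triu_indices(a.shape[0], k=1)  # visit each undirected pair once
    return int(np.sum(a[upper] != b[upper]))


def hamming_kernel(a, b, gamma=1.0):
    """Illustrative kernel: exp(-gamma * normalized Hamming distance).

    This is an assumed form, not the modification used in the paper.
    """
    n = np.asarray(a).shape[0]
    if n < 2:
        raise ValueError("need at least two nodes")
    n_pairs = n * (n - 1) / 2
    return float(np.exp(-gamma * hamming_distance(a, b) / n_pairs))


def gram_matrix(networks, gamma=1.0):
    """Build the symmetric kernel (Gram) matrix for a list of networks."""
    m = len(networks)
    K = np.empty((m, m))
    for i in range(m):
        for j in range(i, m):
            K[i, j] = K[j, i] = hamming_kernel(networks[i], networks[j], gamma)
    return K


# Three toy networks on the same three nodes.
triangle = np.array([[0, 1, 1], [1, 0, 1], [1, 1, 0]])
path = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]])
empty = np.zeros((3, 3), dtype=int)

K = gram_matrix([triangle, path, empty])
print(np.round(K, 3))
```

A Gram matrix of this kind could then be passed to any Gaussian process routine that accepts a precomputed covariance. Because kernels can be summed or multiplied, a kernel on other covariates could be combined with it, which is one way to read the remark about integrating data of mixed types.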

Based on this scheme, we summarize and analyze recent work related to interpretable RL with an emphasis on papers published in the past 10 years.

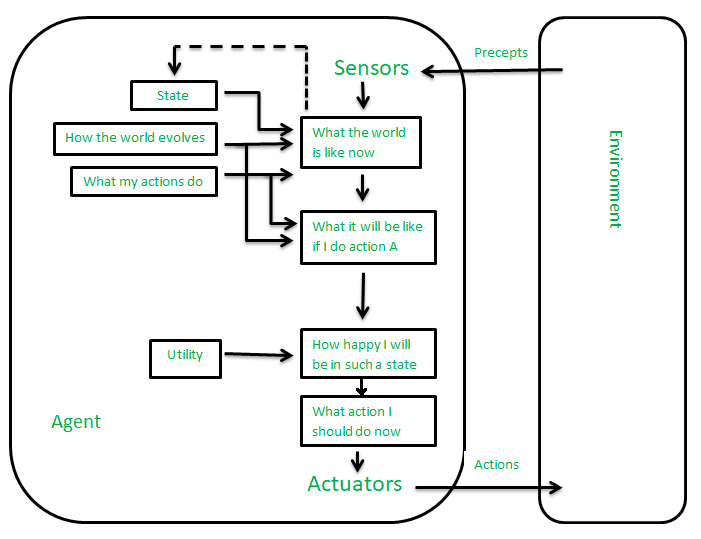

In particular, we argue that interpretable RL may embrace different facets: interpretable inputs, interpretable (transition/reward) models, and interpretable decision-making. To that aim, we distinguish interpretability (as a property of a model) and explainability (as a post-hoc operation, with the intervention of a proxy) and discuss them in the context of RL with an emphasis on the former notion. This survey provides an overview of various approaches to achieve higher interpretability in reinforcement learning (RL). In such contexts, a learned policy needs for instance to be interpretable, so that it can be inspected before any deployment (e.g., for safety and verifiability reasons). Although deep reinforcement learning has become a promising machine learning approach for sequential decision-making problems, it is still not mature enough for high-stake domains such as autonomous driving or medical applications.
